• Objectives

• Testing in the Software Lifecycle (Software Development Models)

• What is a Testing Phase?

• Different Types of Testing Phases

• Objective of Testing Phases

• Traceability Matrix

• Software Development Models

- The Software Development Life Cycle (SDLC) is a model that lays out a detailed plan for how to create, develop, implement and eventually retire software.

- It’s a complete plan outlining how the software will be born, raised and eventually be retired from its function.

- Although some of the models don’t explicitly say how the program will be retired, it is commonly understood that all software eventually reaches its end in an ever-changing world of web, software and programming technology.

• Waterfall model

- The waterfall model adopts a 'top down' approach regardless of whether it is being used for software development or testing.

- Development is broken into a series of sequential stages; it is also called the linear sequential model

- One of the earliest models developed for large projects

- Testing also follows the same sequence

- In this methodology, you move on to the next step only after you have completed the present step.

- There is no scope for jumping backward or forward or performing two steps simultaneously.

- This model follows a non-iterative approach. Its main benefit is its simple, systematic and orthodox approach.

- However, it has many shortcomings, since bugs and errors in the code are not discovered until the testing stage is reached. This can often lead to wasted time, money and valuable resources.

This model follows a sequential flow, in which progress cascades from one step to the next like a waterfall.


- Requirement Gathering and Analysis: In this phase, the client’s requirements are gathered and analyzed, and a requirement specification document is created from that analysis. This document is used as the basis for building the system.

- Design: This is an important phase, in which the entire software is designed based on the software requirement specifications. The system architecture, along with the required hardware and software specifications, is worked out.

- Implementation: After the design stage comes the implementation stage, where the code for the software is written. When the modules are ready, unit tests are carried out on them to check whether there are problems with the software. Any defects found are fixed before proceeding to the next stage.

- Testing and Debugging: After the software has been developed completely, it is passed on to the testers. They run different tests on the software, and any defects found are fixed.

- Delivery: Once the software has been debugged and passed on, it is deployed at the client side. The client is given a thorough walkthrough of the software so that they are able to use it.

- Maintenance: After the software has been installed, the client uses the software and may ask for changes. The changes and general maintenance of the software are taken care of in this phase.

• Waterfall Model - Software Testing Methodology

• Requirement analysis - Test Initiation

• Test Planning/Test case design

• Test Execution

• Test Sign-Off

• Iterative development model

• Iterative development is the process of establishing requirements, designing, building and testing a system, done as a series of smaller developments

• More realistic approach to development of large-scale systems and software

• Each increment is fully tested before beginning the next

• Total planned testing effort would be more than that for waterfall model

• Concerns when adopting the waterfall model

• Typically, more time than was initially scheduled is needed to integrate subsystems into a complete, working application. This squeezes the time allocated to testing, which is often the first item to be cut.

• Design flaws that require significant changes to the product are discovered late in the software cycle.

• The project’s requirements must be stated and frozen at the first stages of the development process. Often, the project’s stakeholders don’t completely understand the business and product requirements at the beginning of the project. Testing against irrelevant or outdated requirements is a waste of time.

• The traditional practice allows only a single review to finalize each project stage.

• By excluding the testing organization from the design and development process, the opportunity to leverage the input of those with a quality-focused mindset is lost.

• Iterative development model


• The Requirements Phase

As with any lifecycle model, during the requirements analysis phase the quality team is engaged in test planning and design. This in itself is nothing new. What differs from the waterfall model is the vision of how the test plan design will be applied later in the project. For example, the waterfall team would plan an integration and system test as the last steps before software release, whereas the iterative team prepares for an integration test at the first opportunity. As the architecture is selected and the first components are created, the testing team works with development on scaffolding code and test harnesses. The goal is to reuse these elements throughout the project's life, although this may not always be practical or possible. A continual focus on reusing test scripts and related artifacts allows these activities to pay for themselves in later iterations.

• The Design Phase

In the design phase, the software architecture and the components that meet the requirements are designed. Testing activity here begins to focus on the most atomic element: the unit. In the first iteration of a project, there may not be much to do here. In subsequent iterations, the focus will be on how new or changed components affect the system (regression testing). This is where test automation begins to pay off: the tests performed in the previous iteration should be applied again here to the changes and/or additions.
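The idea that each iteration re-applies the previous iteration's tests can be sketched as a cumulative regression suite. This is a minimal Python illustration under our own assumptions. `RegressionSuite`, `apply_discount` and the test names are hypothetical. A real project would normally use a test framework such as `unittest` or pytest rather than a hand-rolled registry.

```python
from typing import Callable, Dict, List, Tuple

# A named check that returns True when the behaviour is correct.
Check = Tuple[str, Callable[[], bool]]


class RegressionSuite:
    """Hypothetical registry: tests accumulate across iterations and are never dropped."""

    def __init__(self) -> None:
        self._by_iteration: Dict[int, List[Check]] = {}

    def add(self, iteration: int, name: str, check: Callable[[], bool]) -> None:
        self._by_iteration.setdefault(iteration, []).append((name, check))

    def run(self, iteration: int) -> Dict[str, bool]:
        """Run this iteration's new tests plus every test from earlier iterations."""
        results: Dict[str, bool] = {}
        for it in sorted(self._by_iteration):
            if it <= iteration:
                for name, check in self._by_iteration[it]:
                    results[name] = check()
        return results


# Hypothetical component that was changed in iteration 2.
def apply_discount(price: float, percent: float) -> float:
    return round(price * (1 - percent / 100), 2)


suite = RegressionSuite()
suite.add(1, "zero discount keeps price", lambda: apply_discount(80.0, 0) == 80.0)
suite.add(2, "10% off 80.00 is 72.00", lambda: apply_discount(80.0, 10) == 72.0)

print(suite.run(1))  # only the iteration-1 test
print(suite.run(2))  # iteration-1 test re-run as a regression check, plus the new one
```

Suppose a change made in iteration 2 broke the zero-discount case. `suite.run(2)` would then report it as `False`, even though no iteration-2 test targets it. That is where the automation investment pays off.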

• The Implementation Phase

In all iterations, the implementation phase will be saturated with testing. Additional time will be required in early cycles, as the bulk of the test creation and coding will be performed then. A well-designed automated test will have a lifecycle parallel to, and should be considered a sibling of, the project source code.


• Testing

- Testing must begin "in the small" and progress "to the large" to avoid wasting development time and resources. It is a systematic process that begins with the unit test, where it is confirmed that the software functions as a unit.

- Next, the components are assembled and integrated to form the complete software package. Integration testing then addresses the issues associated with verification and program construction. When it has been determined that all the components of the software package are present and functional, validation testing can begin to provide assurance that the software meets the functional requirements established for this iteration.

- Only after each of these plateaus has been reached can system testing begin. System testing verifies that all of the disparate, constituent objects of the application mesh properly and that the overall system function and performance can be achieved.
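The "in the small to the large" progression can be illustrated with Python's standard `unittest` module. Unit tests check each component in isolation, and the integration tests run only once the units pass. The components (`parse_quantity`, `line_total`, `order_total`) and the gating helper `run_levels` are hypothetical. Validation and system testing would sit on top of this and are not shown.

```python
import unittest


# Hypothetical components used only for illustration.
def parse_quantity(text: str) -> int:
    """Unit 1: turn user input such as ' 3 ' into a positive integer."""
    value = int(text.strip())
    if value <= 0:
        raise ValueError("quantity must be positive")
    return value


def line_total(unit_price_cents: int, quantity: int) -> int:
    """Unit 2: price of one order line, in cents."""
    return unit_price_cents * quantity


def order_total(unit_price_cents: int, raw_quantity: str) -> int:
    """Integration point: wires the two units together."""
    return line_total(unit_price_cents, parse_quantity(raw_quantity))


class UnitTests(unittest.TestCase):
    """'In the small': each unit is checked in isolation."""

    def test_parse_quantity_strips_whitespace(self):
        self.assertEqual(parse_quantity(" 3 "), 3)

    def test_parse_quantity_rejects_zero(self):
        with self.assertRaises(ValueError):
            parse_quantity("0")

    def test_line_total(self):
        self.assertEqual(line_total(250, 4), 1000)


class IntegrationTests(unittest.TestCase):
    """'To the large': the units are exercised together."""

    def test_order_total_combines_units(self):
        self.assertEqual(order_total(250, " 4 "), 1000)

    def test_invalid_input_propagates(self):
        with self.assertRaises(ValueError):
            order_total(250, "-1")


def run_levels() -> bool:
    """Run unit tests first; move on to integration tests only if they all pass."""
    loader = unittest.TestLoader()
    runner = unittest.TextTestRunner(verbosity=1)
    for case in (UnitTests, IntegrationTests):
        result = runner.run(loader.loadTestsFromTestCase(case))
        if not result.wasSuccessful():
            return False
    return True


if __name__ == "__main__":
    print("all levels passed" if run_levels() else "stopped at a failing level")
```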

• Review Phase

- The review phase is when the software is evaluated, the current requirements are reviewed, and changes and additions to the requirements are proposed.

- It is in this phase that one begins to see the rewards of the previous labors and the reuse of test automation.

- The reporting and analysis components of the testing tools should be used heavily here to document whether or not the software is meeting the goals of the iteration.

• Rapid Application Development (RAD)

• Emphasizes an extremely short development cycle

• Requirements are well understood

• Creates a fully functional system in a very short time

• Achieved by component-based construction

• Since well-tested components are reused, they only need integration testing when they are used

• The total planned testing effort is reduced compared to other models

• Agile model

• Attempts to minimize risk by developing software in short time boxes called iterations

• Each iteration is like a miniature software project of its own

• Includes all the tasks necessary to release a mini-increment of new functionality: planning, requirements analysis, design, coding, testing and documentation

• Agile methods emphasize real-time communication, preferably face-to-face, over written documents

• Each iteration will be fully tested before proceeding

• The total planned testing efforts will be more than that for waterfall model

• Rational Unified Process (RUP)

• Is an execution strategy

• Not a development methodology

• Goal is to ensure the production of high-quality software that meets the needs of its end-users, within a predictable schedule and budget

• Achieved by the following means:

• Disciplined approach for allocating roles and responsibilities

• Easy access to a knowledge base which combines the experience base of companies

• Common communication language (UML - Unified Modeling Language)

• Models created and maintained rather than voluminous documentation

• Testing within a Life Cycle Model


• Benefits of Early Testing

- Testers should be involved in reviewing documents as soon as drafts are available in the development life cycle

- Early test design leads to finding defects early, when they are cheaper to fix

- Prevents defect multiplication

- Prevents defects from leaking into the next stage

- Better quality, reduced costs, and less time spent running tests because fewer defects are found


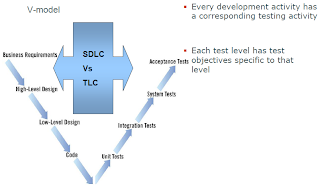

• V&V vs. Waterfall

- The main difference between the waterfall model and the V model is that in the waterfall model, testing activities are carried out after the development activities are over. In the V model, on the other hand, testing activities start from the first stage itself.

- In other words, in the waterfall model testing follows development in sequence, while in the V model testing runs in parallel with development. As a result, software built using the V model typically has fewer defects than software built using the waterfall model, because testing activities are carried out alongside development.

- The waterfall model is used when the user's requirements are fixed. If the requirements are uncertain and keep changing, the V model is the better alternative.

• Testing Phases

What is a Testing Phase?

Testing phases are the phases in which comprehensive testing activity is carried out across the various levels of the software development lifecycle.

“The testing phase indicates the maturity of the company, quality of design, development and testing processes”

The testing phase of a project constitutes a wide range of opportunities to improve processes within the company.

The awareness of this potential at the initial stage and its use can serve as a strategic asset that ensures the success of the projects.

• Testing Phases

What are the different Testing Phases?

Ø Test Initiation

Ø Test Planning

Ø Test Design

Ø Test Execution

Ø Test Sign-Off


• Testing Phase Objectives

- Record, organize and guide testing activities

- Schedule testing activities according to the test strategy and project deadlines

- Describe how confidence in the product will be demonstrated to customers

- Provide a basis for re-testing during system maintenance

- Provide a basis for evaluating and improving the testing process

- Measure testing efficiency through various metrics reports

• Testing Phases

1) Test Initiation -

It is the first phase of the Software Testing Life Cycle (STLC). It mainly involves the starting formalities when a project comes to an organization. The tasks include:

• Analyze the scope of the project, prepare the contract, review the contract, release.

• Identify test requirements and perform risk assessment.

• Identify resources.

2) Test Planning - The testing process is planned here: test plans are generated and the testing process is scheduled.

3) Test Design -

• Set up the test environment.

• Create a high-level test plan.

• Design test cases and define expected results.

• Decide which sets of test cases, if any, should be automated.

• Prepare the traceability matrix.


4) Test Execution -

In this phase, test execution is the main task.

• Testing - initial test cycles, bug fixes, and re-testing.

• Final testing and implementation.

• Set up a database to track components of the automated testing system, i.e. reusable modules.

• Verify results.

• Defect management.

5) Test Sign-Off -

It is the last phase of the Software Testing Life Cycle. The project is sent for user acceptance and, if everything goes fine, the software testing project is signed off.

• Client acceptance.

• Client sign-off.

• Test Initiation

Ø To determine:

• The exact requirements that the product, system or project deliverables must meet to satisfy the customer’s needs

• Cost analysis to complete the financial picture

• ROI (Return on Investment) Analysis

• High level design, review resource plan and milestone schedule

Ø At the end of the phase, a checklist is prepared and the project deliverables are reviewed with management to decide whether or not to proceed with the project.

Ø Test Initiation covers the following:

• Understanding requirements

• Transition schedule and methodology

• Scope definition

• What are the objectives and goals?

• What’s the schedule for testing activities?

• Resource requirements

• What are the costs involved?

• Test Initiation

Ø Testing consists of verification and validation activities. The initiation phase falls under the test verification activities.

Ø Test verification activities involve:

• Use case verification

• Requirements verification

• Functional design verification

These activities support the Defect Prevention and Quality control Testing strategies

• Test Planning

Plan your work and work your plan.

Ø The test plan provides the overall approach/direction for the intended testing.

Ø Defines the boundaries and the Work Breakdown Structure (WBS) for testing, i.e. what is in scope and what is out of scope.

Ø Helps mitigate adverse impacts on achieving objectives.

Ø Defines how the process is managed, monitored and controlled.

Ø Planning is ‘preparing for the future’ whereas plan implementation is ‘making sure we have what we want when we get there’


Ø Test Planning covers the following-

• Estimation of the test efforts

• Schedule testing activities

• Preparation of Test Plan and test cases

• Develop test cases, automation scripts

• Test Data creation

Ø Test Planning Deliverables –

• Test Strategy

• Test Plan

• Initial Effort Estimation

• Test Design

Ø Test design describes the test architecture that will be used in the test program.

Ø The objective is to organize the test cases properly.

Good test design leads to successful tests.

Ø The design activity is generally split into two parts: analysis, and the actual design of test cases.

Ø Requirement and design walkthroughs are carried out (informal).

Ø Internal/external reviews of test specifications are carried out (formal).

• Test Design

Ø The purpose of a test design technique is to identify test conditions and test cases.

Ø Test design techniques are distinguished as:

• Black Box

• White Box

Black Box Techniques:

A way to derive and select test conditions or test cases based on an analysis of the test basis documentation and the experience of developers, testers and users, for a component or system, without reference to its internal structure.

White Box Techniques:

Based on an analysis of the structure of the component or system.


Test design techniques are also commonly grouped into three categories:

• Specification-based techniques (black box)

• Structure-based techniques (white box)

• Experience-based techniques
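To make the black-box/white-box distinction concrete, here is a small Python sketch. The function `classify_age` and its specification are hypothetical.

- The black-box cases use boundary value analysis, a specification-based technique. They are derived from the stated age ranges alone.
- The white-box cases are chosen by reading the code so that every branch executes. Here they are tracked with a crude `branches_hit` set rather than a real coverage tool such as coverage.py.

```python
from typing import Set

# Hypothetical specification for the function under test:
#   age < 0 -> ValueError, 0..17 -> "minor", 18..64 -> "adult", 65+ -> "senior"
branches_hit: Set[str] = set()  # crude instrumentation for the white-box view


def classify_age(age: int) -> str:
    if age < 0:
        branches_hit.add("negative")
        raise ValueError("age cannot be negative")
    if age < 18:
        branches_hit.add("minor")
        return "minor"
    if age < 65:
        branches_hit.add("adult")
        return "adult"
    branches_hit.add("senior")
    return "senior"


# Black box (specification-based): boundary values taken from the spec alone.
black_box_cases = {0: "minor", 17: "minor", 18: "adult", 64: "adult", 65: "senior"}
for age, expected in black_box_cases.items():
    assert classify_age(age) == expected, (age, expected)
try:
    classify_age(-1)  # just below the lowest valid boundary
    raise AssertionError("expected ValueError for age -1")
except ValueError:
    pass

# White box (structure-based): choose inputs until every branch in the code has run.
branches_hit.clear()
for age in (-1, 5, 30, 70):
    try:
        classify_age(age)
    except ValueError:
        pass
assert branches_hit == {"negative", "minor", "adult", "senior"}
print("boundary cases passed; branches covered:", sorted(branches_hit))
```

The two views overlap but are not the same. The boundary cases come purely from the specification. The branch-coverage inputs only need one value per branch and would not, on their own, catch an off-by-one error at 18 or 65.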

• Test Design

Ø Test Design Deliverables –

• Requirement Traceability Matrix

• Functional Test cases based on use cases/ requirements

• Test coverage report

• Interface Test cases

• Test maintenance/CM (configuration management) procedure

• Test Environment and Test Data requirement

• Test Execution

Ø Test execution begins once the test plan is accepted and the entry criteria for execution (test specifications, environment, scripts, etc.) are satisfied.

Ø This phase involves the actual testing. The testers execute the documented test cases and capture the results in a test report or another tool.

Ø As test execution progresses, the test schedule should be updated to reflect which test scripts or cases have passed and failed, which cannot be run due to dependencies on other items, and which need to be re-tested as a result of failures and/or defects.
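Keeping the schedule in step with execution amounts to tracking a status per test case. Below is a minimal Python sketch. It assumes a hypothetical five-value status set, which is not a standard, and hypothetical test case IDs.

```python
from collections import Counter
from enum import Enum
from typing import Dict, List


class Status(Enum):
    NOT_RUN = "not run"
    PASSED = "passed"
    FAILED = "failed"
    BLOCKED = "blocked"  # cannot run yet because of a dependency on something else
    RETEST = "retest"    # a fix was delivered, so the case must be run again


def summary(statuses: Dict[str, Status]) -> Dict[str, int]:
    """Count test cases per status, including statuses with zero cases."""
    counts = Counter(statuses.values())
    return {status.value: counts[status] for status in Status}


def still_to_schedule(statuses: Dict[str, Status]) -> List[str]:
    """Cases that still need a slot in the schedule."""
    pending = {Status.NOT_RUN, Status.BLOCKED, Status.RETEST}
    return sorted(tc for tc, status in statuses.items() if status in pending)


def record_fix(statuses: Dict[str, Status], test_case: str) -> None:
    """A defect fix moves a failed case back into the queue for re-testing."""
    if statuses[test_case] is Status.FAILED:
        statuses[test_case] = Status.RETEST


run = {
    "TC-01": Status.PASSED,
    "TC-02": Status.FAILED,
    "TC-03": Status.BLOCKED,
    "TC-04": Status.NOT_RUN,
}
record_fix(run, "TC-02")
print(summary(run))
print(still_to_schedule(run))
```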

• Test Execution

Ø This phase involves executing manual and automated tests, recording test results and reporting possible defects to the rest of the project team.

Ø This is repeated according to development iterations, during which new tests are performed, fixes are re-tested, and regression tests are performed.

Ø Test Execution Levels

• Unit Testing

• Integration Testing

• System Testing

• Acceptance Testing

Ø Test Execution Depends on

• Test Environment

• Test Data

• Test Tools


Ø Test result updating and defect logging

• Test Execution

Ø The test execution run plan should be prepared based on the criteria below:

• Impacted Functionality

• Unstable Functionality

• Prioritization

• Functional Dependency

• Test Case priority and complexity
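One way to turn these criteria into an ordered run plan is sketched below in Python. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+), so a dependent test never runs before the tests it depends on. Among the tests that are ready, the ranking is:

1. Impacted functionality first.
2. Then unstable functionality.
3. Then higher priority (1 = highest).

This ranking, the `PlannedTest` fields and the example tests are assumptions for illustration. Test case complexity is not modelled.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter
from typing import List, Tuple


@dataclass(frozen=True)
class PlannedTest:
    name: str
    priority: int                     # 1 = highest priority
    impacted: bool = False            # exercises recently changed functionality
    unstable: bool = False            # area with a history of failures
    depends_on: Tuple[str, ...] = ()  # tests that must run first


def run_order(tests: List[PlannedTest]) -> List[str]:
    """Dependency-respecting run order; assumes every dependency is in `tests`."""
    by_name = {t.name: t for t in tests}
    sorter = TopologicalSorter({t.name: set(t.depends_on) for t in tests})
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies

    def rank(name: str) -> tuple:
        t = by_name[name]
        # False sorts before True, so impacted/unstable tests come first.
        return (not t.impacted, not t.unstable, t.priority, name)

    ready: List[str] = []
    order: List[str] = []
    while sorter.is_active():
        ready.extend(sorter.get_ready())
        ready.sort(key=rank)
        nxt = ready.pop(0)  # run the best-ranked ready test next
        order.append(nxt)
        sorter.done(nxt)    # may unlock tests that depended on it
    return order


tests = [
    PlannedTest("login", priority=1),
    PlannedTest("search", priority=2, impacted=True, depends_on=("login",)),
    PlannedTest("checkout", priority=1, unstable=True, depends_on=("login", "search")),
    PlannedTest("help_page", priority=3),
    PlannedTest("profile", priority=2, impacted=True, depends_on=("login",)),
]
print(run_order(tests))
```

With this example data the plan is login, profile, search, checkout, help_page. `checkout` waits for both of its dependencies even though it is priority 1 and unstable.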


Ø Test Execution Deliverables

• Test Log / Defect Report

• Various Metrics

• Automation scripts execution report

Ø Test Stopping Criteria

• Deadline reached

• Budget exhausted

• Desired coverage achieved

• Desired failure intensity level achieved

• Management decision
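The stopping criteria can be evaluated mechanically once the team has agreed on targets. The following is a minimal Python sketch with these assumptions:

- The coverage target (95% of requirements) is a hypothetical value.
- The failure-intensity target (at most 0.1 failures per hour of testing) is also hypothetical.
- Failure intensity is approximated as failures divided by test hours over the latest period.
- The management decision is simply passed in as a flag.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class ExecutionProgress:
    today: date
    deadline: date
    budget_spent: float
    budget_total: float
    requirements_covered: int
    requirements_total: int
    failures_in_period: int       # failures observed in the latest period
    test_hours_in_period: float   # hours of testing in the same period


@dataclass
class StopTargets:
    coverage: float = 0.95          # hypothetical: 95% of requirements covered
    failure_intensity: float = 0.1  # hypothetical: <= 0.1 failures per test hour


def stopping_reasons(p: ExecutionProgress, t: StopTargets,
                     management_stop: bool = False) -> List[str]:
    """Return every stopping criterion that currently holds (empty list = keep testing)."""
    reasons: List[str] = []
    if p.today >= p.deadline:
        reasons.append("deadline reached")
    if p.budget_spent >= p.budget_total:
        reasons.append("budget exhausted")
    if p.requirements_total and p.requirements_covered / p.requirements_total >= t.coverage:
        reasons.append("desired coverage achieved")
    if (p.test_hours_in_period > 0
            and p.failures_in_period / p.test_hours_in_period <= t.failure_intensity):
        reasons.append("desired failure intensity achieved")
    if management_stop:
        reasons.append("management decision")
    return reasons


# Hypothetical status snapshot, for illustration only.
progress = ExecutionProgress(
    today=date(2024, 5, 10), deadline=date(2024, 5, 20),
    budget_spent=8_000, budget_total=10_000,
    requirements_covered=96, requirements_total=100,
    failures_in_period=2, test_hours_in_period=40,
)
print(stopping_reasons(progress, StopTargets()))
```

Each criterion is reported separately rather than stopping at the first one that holds, because the decision to stop is usually a judgement across them.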

• Test Sign-Off

Ø This phase signifies that testing has been completed in accordance with the accepted Test Plan.

Ø The Test Manager provides sign-off to the customer on successful completion of testing.

Ø In case of conditional sign-offs, the caveats should be stated.

Ø Various metrics reports are prepared/updated by the Test Manager.

Ø Defect prevention activities should be carried out based on UAT or production defects.

• Traceability Matrix

Ø A document showing the relationship between test requirements and test cases.

Ø From the traceability matrix, we can check which requirements are covered by which test cases, and which requirements a particular test case covers.

Ø In this matrix, the rows hold the requirements.

Ø Every document (HLD, LLD, etc.) gets a separate column.

Ø In each cell, we state which section of that document (for example, the HLD) addresses the requirement in that row. Ideally, if every requirement is addressed in every document, all the cells will contain valid section IDs or names.

Ø If a requirement is missing from a document, we go back to that document and correct it so that it addresses the requirement.
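A traceability matrix is easy to hold as plain data. The Python sketch below uses hypothetical requirement IDs, section numbers and test case IDs. It builds one row per requirement, with a column per document (HLD, LLD) plus a column of covering test cases, then lists the empty cells that need to be fixed. The forward direction (which requirements a test case covers) is simply the `test_cases` mapping itself.

```python
from typing import Dict, List, Set, Tuple

DOCUMENTS = ("HLD", "LLD")

Row = Dict[str, object]


def build_matrix(
    requirements: List[str],
    doc_sections: Dict[str, Dict[str, str]],  # document -> {requirement: section id}
    test_cases: Dict[str, Set[str]],          # test case -> requirements it covers
) -> Dict[str, Row]:
    matrix: Dict[str, Row] = {}
    for req in requirements:
        row: Row = {doc: doc_sections.get(doc, {}).get(req) for doc in DOCUMENTS}
        # Backward trace: which test cases cover this requirement?
        row["Tests"] = sorted(tc for tc, reqs in test_cases.items() if req in reqs)
        matrix[req] = row
    return matrix


def find_gaps(matrix: Dict[str, Row]) -> List[Tuple[str, str]]:
    """(requirement, column) pairs whose cell is empty: a missing section or no tests."""
    return [(req, col) for req, row in matrix.items() for col, cell in row.items() if not cell]


requirements = ["REQ-1", "REQ-2", "REQ-3"]
doc_sections = {
    "HLD": {"REQ-1": "2.1", "REQ-2": "2.4", "REQ-3": "3.1"},
    "LLD": {"REQ-1": "4.3", "REQ-3": "5.2"},
}
test_cases = {"TC-01": {"REQ-1"}, "TC-02": {"REQ-1", "REQ-2"}}

matrix = build_matrix(requirements, doc_sections, test_cases)
for req, row in matrix.items():
    print(req, row)
print("gaps:", find_gaps(matrix))
```

Here the gaps are REQ-2 with no LLD section and REQ-3 with no covering test case. Each gap points back to the document or test set that needs correcting.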
